History and Development

Recurrent neural networks (RNNs) date back several decades, but until the 1990s they struggled to learn long-term dependencies. A fundamental limitation was the “vanishing gradient” problem: as an RNN tries to learn patterns over many time steps, the feedback signals used for learning fade exponentially with each step, making it nearly impossible to capture long-range connections (Bengio, Simard, & Frasconi, 1994). In 1997, a breakthrough came in the form of the Long Short-Term Memory (LSTM) network, invented by Sepp Hochreiter and Jürgen Schmidhuber. Their LSTM architecture was explicitly designed to overcome RNNs’ chronic forgetfulness by introducing a novel memory cell mechanism (Hochreiter & Schmidhuber, 1997). This allowed neural networks to retain information over far longer sequences than was previously possible, effectively solving a fundamental limitation of earlier RNNs.

Following its initial proposal, LSTM underwent refinements and steadily gained recognition. An important enhancement was the addition of a “forget gate” to the design (Gers, Schmidhuber, & Cummins, 2000), allowing the model to learn when to reset its memory cell and clear out irrelevant information. Such improvements, along with increasing computing power in the 2000s, gradually made LSTMs viable for real-world tasks. By the 2010s, LSTM networks were driving notable breakthroughs in machine learning. They powered major advances in speech recognition and formed the backbone of early neural machine translation systems, enabling algorithms to handle entire sentences with improved context retention. This period cemented LSTM’s reputation as a workhorse for sequence modeling.

Theoretical Underpinnings

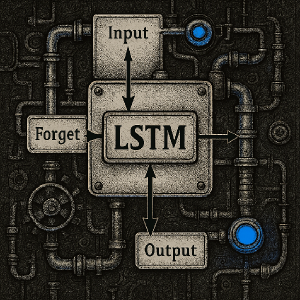

At the core of an LSTM network is the idea of a memory cell that preserves information over time, coupled with gating mechanisms that regulate the flow of information. Each LSTM cell has three types of gates—input, output, and forget—which act like valves on the cell’s information pipeline. The input gate controls how much of the new data should be added to the cell’s state, the forget gate decides what old information to erase, and the output gate determines how much of the cell’s state to pass on to the next step. Through these gates, the network learns what to store, what to discard, and when to make stored information available. This design means that, unlike a traditional RNN that overwrites its hidden state at every step, an LSTM can selectively retain important signals and prevent them from being washed out by subsequent inputs (Hochreiter & Schmidhuber, 1997; Gers et al., 2000).

The clever engineering of LSTM cells directly addresses the vanishing gradient problem that bedeviled earlier RNNs. By design, an LSTM cell can maintain a constant error signal over time—its internal cell state can carry information forward largely unchanged if the gates permit, allowing gradients (the signals that drive learning) to remain intact over long sequences. In practice, this architecture lets LSTMs capture long-range dependencies that standard RNNs would simply forget. As a result, the introduction of LSTM marked a turning point, opening the door for neural networks to learn from long-term context (Bengio et al., 1994). The math underpinning these developments is below:

Long Short-Term Memory (LSTM) Cell Equations

At each time step \(t\), the LSTM processes an input vector \(x_t\), maintains a cell state \(C_t\), and produces a hidden state \(h_t\). The gates control how information flows through the cell.

Forget Gate

\[ f_t = \sigma(W_f x_t + U_f h_{t-1} + b_f) \]

Input Gate

\[ i_t = \sigma(W_i x_t + U_i h_{t-1} + b_i) \]

Candidate Cell State

\[ \tilde{C}_t = \tanh(W_C x_t + U_C h_{t-1} + b_C) \]

Cell State Update

\[ C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \]

Output Gate

\[ o_t = \sigma(W_o x_t + U_o h_{t-1} + b_o) \]

Notation

- \(x_t\): Input vector at time step

\(t\)

- \(h_{t-1}\): Previous hidden

state

- \(C_{t-1}\): Previous cell

state

- \(\sigma\): Sigmoid activation

function

- \(\tanh\): Hyperbolic tangent

activation function

- \(\odot\): Element-wise (Hadamard)

product

- \(W_*\), \(U_*\), \(b_*\): Weight matrices and bias vectors for each gate

Modern Applications in Open-Source Intelligence

In today’s data landscape, information flows continuously from news and social media, creating a need to detect temporal patterns and anomalies—a classic open-source intelligence (OSINT) challenge. LSTM networks have proven to be adept allies in this arena. Thanks to their memory for sequences, LSTMs can track how stories or trends evolve over time, making them well-suited to notice subtle changes. For instance, an LSTM-based model might ingest a stream of tweets or news articles and flag when the usual pattern shifts—perhaps an unusual surge in mentions of a topic or a sudden change in sentiment. This ability to model time series of text or events has made LSTMs a go-to tool for analysts monitoring social networks and news for emerging events.

Recent research highlights LSTM’s value for OSINT tasks. For example, LSTM models have been built to anticipate viral surges on social media (Bidoki, Mantzaris, & Sukthankkar, 2019), to flag early signs of civil unrest from online chatter (Macis, Tagliapietra, Meo, & Pisano, 2024), and to chart the evolution of public opinion during fast-moving crises (Mu et al., 2023). In short, LSTMs have become invaluable tools for sifting through streams of temporal data to catch meaningful changes hidden in the noise.

Future Directions

Despite the buzz surrounding newer architectures, LSTMs continue to evolve and find new roles, often in combination with other techniques. One promising direction is the integration of LSTMs with reinforcement learning (RL) systems. In complex decision-making environments—such as robotics or strategy games—agents need a memory of past events to handle partially observable situations. By embedding an LSTM into an RL agent, the agent gains the ability to remember previous observations, enabling more informed decisions. This synergy has already been demonstrated: DeepMind’s AlphaStar, a program that mastered the real-time strategy game StarCraft II, utilized an LSTM module to maintain an internal memory of the game’s progression (Vinyals et al., 2019). The LSTM allowed the AI to infer hidden information from historical observations. Going forward, we can expect more reinforcement learning algorithms to incorporate LSTM-like memory units so that AI agents can plan and act with a better sense of temporal context.

Another frontier for LSTMs lies in combining them with ensemble methods and hybrid models. Ensemble learning—where multiple models are combined to improve performance—has now been applied to deep networks. Instead of relying on a single LSTM, researchers are using collections of LSTMs to vote on outcomes or to specialize in different aspects of a problem (Mohammed & Kora, 2023). This approach tends to yield more robust and accurate results, as averaging across diverse models can cancel out individual quirks or errors. For instance, training several LSTMs on different subsets of data and then averaging their predictions often outperforms any one model alone. Hybrid designs are also on the rise. In one study, an LSTM was augmented with an evolutionary algorithm to better predict social media trends, illustrating the creative ways neural memory can be enhanced by other techniques (Mu et al., 2023). Even as the Transformer architecture steals the spotlight in AI, LSTMs are finding new life as part of these complex systems. By teaming up with reinforcement learning and ensemble methods, these memory-imbued networks are poised to remain key players in the quest to build machines that not only learn and reason, but also remember. References

References

Macis, L., Tagliapietra, M., Meo, R., & Pisano, P. (2024). Breaking the trend: Anomaly detection models for early warning of socio-political unrest. Technological Forecasting and Social Change, 206, 123495.

Vinyals, O., Babuschkin, I., Czarnecki, W. M., et al. (2019). Grandmaster level in StarCraft II using multi-agent reinforcement learning. Nature, 575(7782), 350-354.