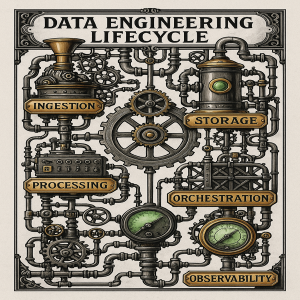

The Data Engineering Lifecycle: Building the Modern Enterprise

In an age when data has displaced oil as the world’s most valuable resource, the sophistication with which organisations handle data defines their survival. The data engineering lifecycle—a term once confined to technical manuals—now demands the attention of every boardroom. From ingestion to observability, each stage represents a potential source of either competitive advantage or costly vulnerability.

Ingestion: Capturing the Torrent

The lifecycle begins with ingestion. Enterprises today are inundated with torrents of data from customer interactions, mobile devices, operational systems, and external feeds (García-Molina, Ullman, & Widom, 2008). Success hinges on breadth: structured and unstructured data, high-velocity streams, and legacy batch uploads must all be accommodated. The architecture must be resilient yet flexible, capable of scaling horizontally without buckling under sudden spikes.

Apache Kafka and similar event-streaming platforms have become de facto standards for ingestion at scale, letting businesses reliably capture millions of events per second (Kreps, Narkhede, & Rao, 2011).
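Horizontal scaling in log-based systems of this kind typically rests on partitioning: events that share a key are routed to the same partition, so partitions can be spread across machines while per-key ordering is preserved. The sketch below illustrates the idea in plain Python. It is not Kafka's client API or its actual hashing scheme; the function names, the `key` field, and the choice of SHA-256 are assumptions made purely for illustration.

```python
import hashlib
from collections import defaultdict


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition index with a hash that is stable across runs."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def ingest(events, num_partitions: int) -> dict:
    """Append each event to the partition chosen by its key (hypothetical 'key' field)."""
    partitions = defaultdict(list)
    for event in events:
        partitions[partition_for(event["key"], num_partitions)].append(event)
    return dict(partitions)


if __name__ == "__main__":
    sample = [
        {"key": "customer-1", "action": "view"},
        {"key": "customer-2", "action": "click"},
        {"key": "customer-1", "action": "purchase"},
    ]
    for index, batch in sorted(ingest(sample, num_partitions=4).items()):
        print(index, batch)
```

Because the mapping depends only on the key and the partition count, adding consumers does not change where a given customer's events land. Changing the partition count does, which is one reason it is usually chosen with headroom.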

Processing: Refining the Raw Material

Once data is captured, it must be processed—cleaned, enriched, and validated to ensure it is fit for decision-making. Without processing, enterprises risk making strategic decisions based on corrupted or incomplete information (Abiteboul, Hull, & Vianu, 1995).

Advances in distributed systems, such as Apache Spark and Flink, now enable near-real-time processing of massive datasets, drastically reducing latency from ingestion to insight (Zaharia et al., 2016). Processing transforms data from a chaotic liability into a strategic asset.
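The cleaning, validation, and enrichment steps described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a Spark or Flink job; the record fields (`customer_id`, `amount`, `country`), the region lookup table, and the validation rules are all hypothetical.

```python
# Hypothetical reference data used for enrichment.
REGION_BY_COUNTRY = {"DE": "EMEA", "US": "AMER", "JP": "APAC"}


def clean(record: dict) -> dict:
    """Trim stray whitespace from every string field."""
    return {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}


def validate(record: dict) -> list:
    """Return a list of rule violations; an empty list means the record is valid."""
    errors = []
    if not record.get("customer_id"):
        errors.append("missing customer_id")
    amount = record.get("amount")
    is_number = isinstance(amount, (int, float)) and not isinstance(amount, bool)
    if not is_number or amount < 0:
        errors.append("amount must be a non-negative number")
    return errors


def enrich(record: dict) -> dict:
    """Attach a sales region derived from the country code."""
    region = REGION_BY_COUNTRY.get(record.get("country"), "UNKNOWN")
    return {**record, "region": region}


def process(records):
    """Clean, validate, and enrich; rejected records are kept alongside their errors."""
    accepted, rejected = [], []
    for raw in records:
        record = clean(raw)
        errors = validate(record)
        if errors:
            rejected.append((record, errors))
        else:
            accepted.append(enrich(record))
    return accepted, rejected
```

Keeping rejected records together with the reasons for rejection, rather than silently dropping them, is a design choice that makes incomplete or corrupted inputs visible instead of letting them vanish from downstream reports.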

Storage: Balancing Speed and Scale

Storage strategies must align with the enterprise’s broader ambitions. Legacy relational databases are ill-suited to petabyte-scale analytical workloads. Modern data engineering instead blends cloud-native storage solutions, combining object stores such as Amazon S3 with high-performance warehouses such as Snowflake and lakehouse architectures such as Databricks Lakehouse (Armbrust et al., 2021).

Efficient storage is not simply about cost reduction. It enables faster queries, supports richer analytics, and provides the foundation for machine learning initiatives.

Orchestration: Automating the Complex

In a modern enterprise, data must traverse complex workflows, combining feeds from multiple sources, undergoing numerous transformations, and triggering business processes automatically. Manual handling is both impractical and dangerous.

Orchestration tools such as Apache Airflow and Dagster now serve as the control planes for these workflows, ensuring reliability, traceability, and resilience (Crankshaw et al., 2015). Automation enables real-time data products and services that customers increasingly demand.
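At their core, orchestrators of this kind model a workflow as a directed acyclic graph of tasks, run each task only after its upstream dependencies have finished, and retry failures. The sketch below shows that idea using only Python's standard library. It is not the Airflow or Dagster API; `run_pipeline`, the task names, and the retry policy are assumptions made for illustration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_pipeline(tasks: dict, dependencies: dict, max_retries: int = 2) -> list:
    """Run tasks in dependency order, retrying each failed task up to max_retries times.

    tasks maps a task name to a zero-argument callable.
    dependencies maps a task name to the set of task names it depends on.
    Returns a log of (task, attempt, outcome) tuples for traceability.
    """
    order = list(TopologicalSorter(dependencies).static_order())
    log = []
    for name in order:
        for attempt in range(1, max_retries + 2):
            try:
                tasks[name]()
                log.append((name, attempt, "success"))
                break
            except Exception as exc:
                log.append((name, attempt, f"failed: {exc}"))
        else:
            # Every attempt failed: stop so downstream tasks never see bad input.
            raise RuntimeError(f"task {name!r} failed after {max_retries + 1} attempts")
    return log


if __name__ == "__main__":
    tasks = {
        "extract": lambda: print("extracting"),
        "transform": lambda: print("transforming"),
        "load": lambda: print("loading"),
    }
    dependencies = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}
    for entry in run_pipeline(tasks, dependencies):
        print(entry)
```

The returned log is a small stand-in for the traceability a production orchestrator provides: every attempt, successful or not, leaves a record.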

Observability: Trust but Verify

Observability is the final, critical stage. Without visibility into data pipelines, enterprises are flying blind. Observability encompasses metrics, tracing, logging, and anomaly detection—providing early warnings when things go wrong (Sigelman et al., 2010).
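As a concrete instance of anomaly detection on a pipeline metric, the sketch below flags a run whose row count deviates sharply from recent history. The z-score rule, the threshold of 3.0, and the function name are illustrative assumptions, not a description of any particular observability product.

```python
import statistics


def is_anomalous(history, latest, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard deviations from the mean.

    history holds recent values of a pipeline metric, such as rows loaded per run.
    """
    if len(history) < 2:
        return False  # too little data to estimate spread
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # A perfectly flat history: any change at all is worth a look.
        return latest != mean
    return abs(latest - mean) / stdev > threshold


if __name__ == "__main__":
    recent_row_counts = [1000, 1020, 980, 1010, 990]
    print(is_anomalous(recent_row_counts, 1005))
    print(is_anomalous(recent_row_counts, 400))
```

A check like this would typically run after each load and raise an alert rather than halt the pipeline, so that a sudden drop in volume is noticed before it reaches a dashboard or a model.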

Strong observability is not only about operational efficiency; it is also fundamental for regulatory compliance under regimes such as GDPR and CCPA. It ensures that data lineage is auditable and that issues are addressed before they become business risks.

Why the Lifecycle Matters: Beyond IT

Each stage of the lifecycle confers a strategic benefit or, if mismanaged, a strategic liability. Data lost during ingestion may be unrecoverable. Flawed processing yields misleading analytics. Poor storage inflates costs. Failed orchestration slows time-to-insight. Weak observability leaves enterprises vulnerable to both internal and external threats.

Moreover, companies that master the data lifecycle gain agility: the ability to personalize services, predict market shifts, and optimize operations in real time. This is the true competitive advantage in the digital economy.

Data Engineering as Strategic Infrastructure

C-suite leaders often mistake data engineering for a technical cost centre. This is a grave error. Properly executed, data engineering underpins revenue generation, innovation, and resilience.

Consider Amazon, Netflix, and Tesla: firms that excel not because of superior instincts but because of superior data pipelines. Their competitive advantage compounds over time, as their models become smarter, their operations more efficient, and their customer engagement more personalized (Davenport & Redman, 2020).

Building for the Future

Future data challenges will only become more formidable. Edge computing, AI model training, and the proliferation of IoT devices are fragmenting the data landscape. Enterprises must prepare now for an era of exabyte-scale, decentralized data ecosystems (Gubbi et al., 2013).

The tools, thankfully, are improving. Open-source ecosystems flourish; cloud providers offer scalable, integrated services; best practices are increasingly codified.

But strategy must lead technology. Data engineering must be seen not as a discrete function, but as the circulatory system of the modern enterprise. Those who master the lifecycle will thrive. Those who neglect it will be swept away.

References

Abiteboul, S., Hull, R., & Vianu, V. (1995). Foundations of Databases. Addison-Wesley.

Armbrust, M., Das, T., Zhu, X., Xin, R. S., et al. (2021). Delta Lake: High-Performance ACID Table Storage over Cloud Object Stores. Proceedings of the VLDB Endowment, 13(12), 3411–3424.

Crankshaw, D., Xin, R. S., Franklin, M. J., Gonzalez, J. E., & Stoica, I. (2015). The Missing Piece in Complex Analytics: Low Latency, Scalable Model Management and Serving with Velox. CIDR 2015.

Davenport, T. H., & Redman, T. C. (2020). Data as an Asset: New Rules for Organizations. MIT Sloan Management Review, 61(4), 1–9.

García-Molina, H., Ullman, J. D., & Widom, J. (2008). Database Systems: The Complete Book (2nd ed.). Pearson.

Gubbi, J., Buyya, R., Marusic, S., & Palaniswami, M. (2013). Internet of Things (IoT): A Vision, Architectural Elements, and Future Directions. Future Generation Computer Systems, 29(7), 1645–1660.

Kleppmann, M. (2017). Designing Data-Intensive Applications: The Big Ideas Behind Reliable, Scalable, and Maintainable Systems. O’Reilly Media.

Kreps, J., Narkhede, N., & Rao, J. (2011). Kafka: A Distributed Messaging System for Log Processing. Proceedings of the NetDB Workshop, 1–7.

Sigelman, B. H., Barroso, L. A., Burrows, M., Stephenson, P., et al. (2010). Dapper, a Large-Scale Distributed Systems Tracing Infrastructure. Google Research Publications.

Stonebraker, M., & Çetintemel, U. (2005). “One Size Fits All”: An Idea Whose Time Has Come and Gone. Proceedings of the 21st International Conference on Data Engineering, 2–11.

Zaharia, M., Chowdhury, M., Franklin, M. J., Shenker, S., & Stoica, I. (2016). Apache Spark: A Unified Engine for Big Data Processing. Communications of the ACM, 59(11), 56–65.